
The web is best when it’s fast. A fast web doesn’t just provide a good experience, though that’s certainly important; it also ensures that as many people as possible can access it at all. Many people are on slow, unstable connections. By making a site fast, you make it more likely that they’ll be able to use it.

I purposefully did not do many of the tasks below until I was able to write this post, because I wanted to measure the impact of each change and be able to explain each step. However, some things that help the performance of this site would’ve been too time-consuming to build “the slow way” first only to completely change them to make them faster later. For example:

Please see the Colophon for more details.

Before I do anything, let’s get a snapshot of the current state of the site, to serve as a baseline. I’m going to use the excellent WebPageTest and PageSpeed Insights for this.

Note: For all of the WebPageTest snapshots, I’m testing the homepage of the site, three times, on a Motorola G in Chrome with a ‘3G - slow’ connection. The PageSpeed Insights snapshots are run once, on the homepage.

| Metric | First View | Repeat View |
|---|---|---|
| PageSpeed (Mobile) | 68/100 | |
| PageSpeed (Desktop) | 71/100 | |
| Speed Index (Mobile) | 2025 | 511 |
| Load Time (Mobile) | 8.666s | 2.864s |

While developing on my home’s broadband connection, I thought the site felt fairly zippy, but these baseline results make it clear that there’s plenty of room for improvement. Here’s a breakdown of the content being loaded:

Typically, the first step in improving web performance is reducing the number of requests. For this relatively simple site, that number is already pretty low. There’s only one image on the page; the other two image requests are tiny and from Google Analytics. The JavaScript is all combined into one bundle except for the Google Analytics package, which is loaded asynchronously. I could embed the font file within the CSS, using base64 encoding, but, as we’ll see later, that would make things worse in other ways.
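One cost of base64 embedding that is easy to quantify is raw size: base64 turns every 3 bytes into 4 characters, so the embedded font is about a third larger before compression, and that extra weight ends up inside the CSS file. The sketch below assumes Node.js (for `Buffer`) and uses a made-up 30,000-byte buffer as a stand-in for a font file; `base64Overhead` is a hypothetical helper, not something from this site's build.

```javascript
// Sketch: measure how much a binary payload grows when base64-encoded.
// Assumes Node.js; the byte count is an invented stand-in for a font file.
function base64Overhead(byteLength) {
  const raw = Buffer.alloc(byteLength, 1); // dummy bytes, content is irrelevant
  const encodedLength = raw.toString('base64').length;
  return {
    rawBytes: byteLength,
    encodedBytes: encodedLength,
    ratio: encodedLength / byteLength,
  };
}

const fontOverhead = base64Overhead(30000);
console.log(fontOverhead); // encodedBytes is 40000, a ratio of about 1.33
```

Gzip recovers some of that overhead, so treat the ratio as an upper bound on the transfer cost rather than an exact figure.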

The next typical step is reducing the size of requests. That definitely applies here, so let’s dig in.

Before this exercise, the avatar image on the home page was a 639px × 639px, 392 Kb PNG. It has a fixed width of 150px in the design, so I resized it to 300px × 300px^[The dimensions are doubled to account for retina screens.] and optimized it while converting to JPEG, which brought it down to 70 Kb.

But I can do more. Not every screen is retina, so I need to serve the smaller, non-retina version by default, then use srcset to serve the retina version to screens that need it:

<img src="/avatar.jpg" srcset="/avatar@2x.jpg 2x" alt="Profile picture of Kyle" />
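Since this site renders with React, the same pattern can be generated rather than typed out for every image. Here is a minimal sketch, assuming each image has a retina variant named with an `@2x` suffix, as in the markup above. `retinaImageAttrs` is a hypothetical helper name, and the returned `srcSet` key follows React's prop naming.

```javascript
// Sketch: derive src/srcSet values from a single image path.
// Assumes the retina file sits next to the original with "@2x" before the extension.
function retinaImageAttrs(src) {
  const dot = src.lastIndexOf('.');
  if (dot === -1) {
    throw new Error('Expected a file extension in: ' + src);
  }
  const src2x = src.slice(0, dot) + '@2x' + src.slice(dot);
  // Browsers that don't need (or don't support) srcset fall back to src.
  return { src: src, srcSet: src2x + ' 2x' };
}

const avatar = retinaImageAttrs('/avatar.jpg');
console.log(avatar.srcSet); // "/avatar@2x.jpg 2x"
```

The object can then be spread onto an `img` element alongside its `alt` text.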

And the result:

| Metric | First View | Repeat View |
|---|---|---|
| PageSpeed (Mobile) | 90/100 (+22) | |
| PageSpeed (Desktop) | 96/100 (+25) | |
| Speed Index (Mobile) | 2056 (+1.5%) | 498 (-2.5%) |
| Load Time (Mobile) | 8.666s (-16.2%) | 2.864s (+1.01%) |

I’m not sure why the speed index on the first view and load time on the repeat view both went up slightly, but it’s such a small change that I’m not too concerned. Those oddities aside, this simple optimization resulted in big, easy speed wins.
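For reference, the percentages in parentheses are relative changes against the baseline snapshot. A small sketch of that arithmetic, using the two Speed Index pairs from the tables (`percentChange` is just an illustrative helper name):

```javascript
// Relative change from a baseline value, expressed as a percentage.
function percentChange(before, after) {
  return ((after - before) / before) * 100;
}

// Speed Index (Mobile), baseline vs. after the image optimization.
const firstViewChange = percentChange(2025, 2056);
const repeatViewChange = percentChange(511, 498);

console.log(firstViewChange.toFixed(1) + '%');  // "1.5%"
console.log(repeatViewChange.toFixed(1) + '%'); // "-2.5%"
```

Changes this small are within the run-to-run variation you'd expect from a throttled test connection.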

And here’s the new breakdown:

Note: Even though I’m only testing the homepage, I went ahead and did this exercise for all images on the site.

190 Kb of JavaScript is quite a lot, especially since that size is after gzipping. For now, though, I’m going to leave it be, as I would like to see how fast I can make this site while it still runs React client-side. I suspect that I’ll need to remove React to meet my informal goal of a speed index under 1000, but I’ll save that for last.

My CSS is only 6.5 Kb (gzipped). That’s too small to justify the effort to make it any smaller^[You should always endeavor to keep your CSS as small and simple as you reasonably can. The effort I’m avoiding here is because my styles are already architected in a way to keep them very small. Any further optimization will require either a lot of manual fine-tuning that could break future updates or an automated process using something like PurifyCSS. I intend to implement the latter in a future post.]. There are other reasons besides file size to make CSS as small as possible, which I’ll cover later in this post.

So far, I’ve only made changes that affected the page load time/size, which correlates to the absolute page speed. But that’s not all that matters. Arguably, perceived performance matters even more, as that affects how fast your site feels.

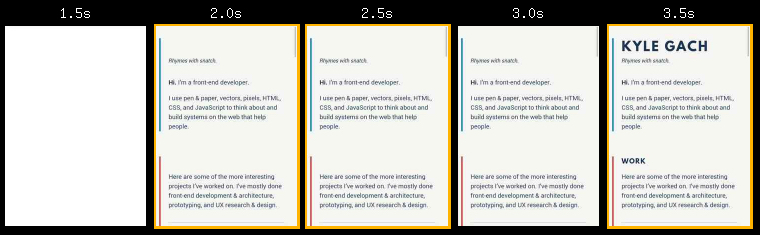

For example, check out this filmstrip view of a portion of the experience waiting for the site to load on 3G:

There’s a full 1.5 seconds between the initial content displaying and the webfont displaying. Worse, because I reference the webfont directly in CSS, via @font-face, there’s a FOIT (flash of invisible text) making the headings completely unreadable until the webfont has finished loading. This is unacceptable.

I’m going to use FontFaceObserver to instead only apply the webfont to headings after it is loaded. While it is loading, they’ll use the same font stack as the body text, changing the FOIT into a FOUT (flash of unstyled text). In some scenarios, this can provide a poor experience just like a FOIT, but I have a couple of things going for me:

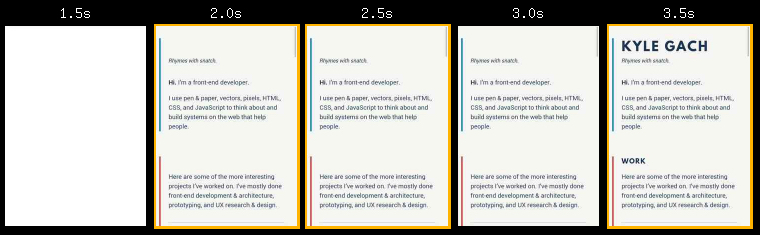

After making the change, the filmstrip now looks like:

Much better! You can see how the headings now display for a brief moment using the same font as the rest of the text, and then switch to the webfont after it has finished loading.
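A minimal sketch of that pattern, not necessarily how this site wires it up: the headings keep the fallback font stack until a class is added to the root element, and the class is only added once FontFaceObserver's `load()` promise resolves. The font name `'Heading Font'`, the class name `fonts-loaded`, and the `applyFontWhenLoaded` helper are all assumptions for illustration.

```javascript
// Assumed CSS, shown here for context only:
//   h1, h2 { font-family: sans-serif; }
//   .fonts-loaded h1, .fonts-loaded h2 { font-family: 'Heading Font', sans-serif; }

// Adds `className` to `root` once the observed font has loaded.
// `observer` is anything with a load() method that returns a promise,
// which is what a FontFaceObserver instance provides.
function applyFontWhenLoaded(observer, root, className) {
  return observer.load().then(
    function () {
      root.classList.add(className); // headings switch to the webfont here
      return true;
    },
    function () {
      return false; // load failed or timed out: keep the fallback font
    }
  );
}

// In the browser, with the FontFaceObserver script loaded, usage might look like:
//   applyFontWhenLoaded(
//     new FontFaceObserver('Heading Font'),
//     document.documentElement,
//     'fonts-loaded'
//   );
```

Passing the observer in, rather than constructing it inside the function, keeps the helper easy to exercise outside a browser.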

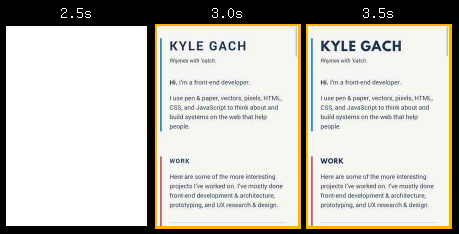

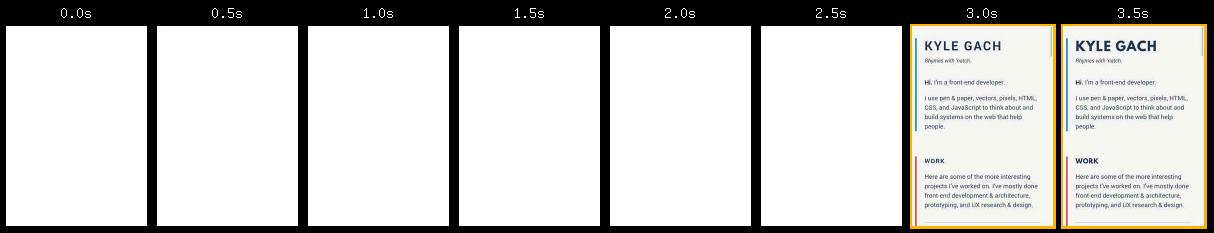

What you can’t see in the filmstrips above is all that time before anything is visible:

Part of that is because the browser must download the full CSS file before it displays anything. In other words, the CSS is blocking the critical path, which happens regardless of the file’s size. So even though the size is fairly small, it has an outsized effect on the perceived performance of the site.

I’ll cover my approach for solving that issue (and possibly removing React on the client-side) in a future post.